AKA “Stable Diffusion without a GPU” 🙂

Currently, the ‘Use CPU if no CUDA device detected’ [1] pull request has not been merged. By following the instructions at [2] and working through the dependency rabbit hole, I finally have Stable Diffusion running on an old dual Xeon server.

[1] https://github.com/CompVis/stable-diffusion/pull/132

[2] https://github.com/CompVis/stable-diffusion/issues/219

Server specs:-

Fujitsu R290 Xeon Workstation

Dual Intel(R) Xeon(R) CPU E5-2670 @ 2.60GHz

96 GB RAM

SSD storage

Sample command line:-

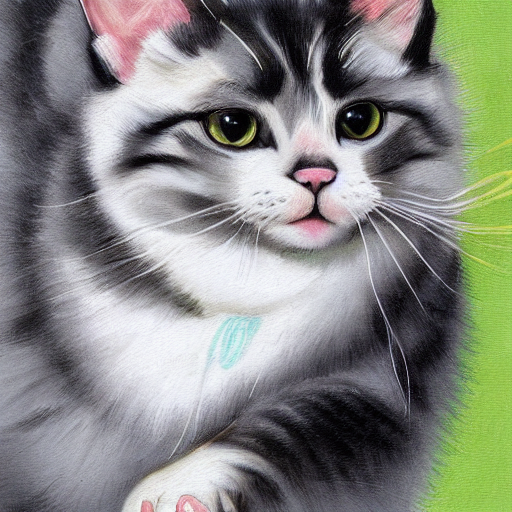

time python3.8 scripts/txt2img.py --prompt "AI image creation using CPU" --plms --ckpt sd-v1-4.ckpt --H 768 --W 512 --skip_grid --n_samples 1 --n_rows 1 --ddim_steps 50

Initial tests show the following:-

| Resolution | Steps | RAM (GB) | Time (minutes) |
| --- | --- | --- | --- |
| 768 x 512 | 50 | ~10 | 15 |
| 1024 x 768 | 50 | ~30 | 24 |
| 1280 x 1024 | 50 | ~65 | 66 |
| 1536 x 1280 | 50 | OOM | N/A |
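
To make the scaling in these measurements easier to see, here is a minimal sketch that expresses each run relative to the smallest one. The numbers are copied from the table (RAM figures are the approximate values reported there); the function name `scaling` and the dictionary keys are purely illustrative.

```python
# Measurements copied from the table: (width, height, approx. RAM in GB, minutes).
runs = [
    (768, 512, 10, 15),
    (1024, 768, 30, 24),
    (1280, 1024, 65, 66),
]


def scaling(runs):
    """Return pixel, RAM and time ratios of each run relative to the first run."""
    base_w, base_h, base_ram, base_minutes = runs[0]
    base_pixels = base_w * base_h
    rows = []
    for width, height, ram_gb, minutes in runs:
        pixels = width * height
        rows.append({
            "resolution": f"{width} x {height}",
            "pixel_ratio": pixels / base_pixels,
            "ram_ratio": ram_gb / base_ram,
            "time_ratio": minutes / base_minutes,
        })
    return rows


for row in scaling(runs):
    print(
        f"{row['resolution']:>12}  pixels x{row['pixel_ratio']:.2f}  "
        f"RAM x{row['ram_ratio']:.2f}  time x{row['time_ratio']:.2f}"
    )
```

On these three data points, doubling the pixel count (768 x 512 to 1024 x 768) roughly tripled RAM use while time grew about 1.6 times; at about 3.3 times the pixels (1280 x 1024), RAM was about 6.5 times and time about 4.4 times the baseline. With so few samples this is only a rough indication, not a fitted model.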

Notes:

1) Typically only 18 of the 32 logical cores were active, regardless of render size.

2) As expected, the calculation is entirely CPU-bound.

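3) For an unknown reason, even with --n_samples and --n_rows set to 1, two images were still created (the times in the table above have been halved to give a per-image figure). A likely cause, not verified here, is the script's --n_iter option, which defaults to 2 in the upstream txt2img.py.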
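
As a follow-up to notes 1 and 3, here is a hedged sketch of how the sample command could be assembled from Python for a single-image run. It assumes the patched script still accepts upstream's `--n_iter` option, and it sets the standard OpenMP variable `OMP_NUM_THREADS` as a hint for the thread count; whether that changes the 18-core observation on this machine is untested. The helper names `build_txt2img_command` and `build_env` are hypothetical.

```python
import os
import shlex


def build_txt2img_command(prompt, width=512, height=768, steps=50,
                          ckpt="sd-v1-4.ckpt"):
    """Assemble the txt2img.py argument list used in the sample command."""
    return [
        "python3.8", "scripts/txt2img.py",
        "--prompt", prompt,
        "--plms",
        "--ckpt", ckpt,
        "--H", str(height),
        "--W", str(width),
        "--skip_grid",
        "--n_samples", "1",
        "--n_rows", "1",
        # Assumption: upstream's --n_iter (default 2) explains the second image.
        "--n_iter", "1",
        "--ddim_steps", str(steps),
    ]


def build_env(threads=None):
    """Copy the current environment and add an OpenMP thread-count hint."""
    env = dict(os.environ)
    # Assumption: the CPU build of PyTorch honours OMP_NUM_THREADS.
    env["OMP_NUM_THREADS"] = str(threads or os.cpu_count() or 1)
    return env


cmd = build_txt2img_command("AI image creation using CPU")
print(shlex.join(cmd))
# To launch for real: subprocess.run(cmd, env=build_env(), check=True)
```

Building the argument list rather than a single string avoids shell-quoting problems with prompts that contain spaces or quotes; `shlex.join` is used only to print a copy-pasteable command.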

Conclusion:

It works. We gain resolution, but at a huge cost in memory and time.